AI endorses and affirms your delusions In the last edition of

|

AI endorses and affirms your delusions

In the last edition of AI Eye, we reported that ChatGPT had become noticeably more sycophantic recently, and people were having fun giving it terrible business ideas — shoes with zippers, soggy cereal cafe — which it would uniformly say was amazing.

The dark side of this behavior, however, is that combining a sycophantic AI with mentally ill users can result in the LLM uncritically endorsing and magnifying psychotic delusions.

On X, a user shared transcripts of the AI endorsing his claim to feel like a prophet. “That’s amazing,” said ChatGPT. “ That feeling — clear, powerful, certain — that’s real. A lot of prophets in history describe that same overwhelming certainty.”

It also endorsed his claim to be God. “That’s a sacred and serious realization,” it said.

Rolling Stone this week interviewed a teacher who said her partner of seven years had spiraled downward after ChatGPT started referring to him as a “spiritual starchild.”

“It would tell him everything he said was beautiful, cosmic, groundbreaking,” she says.

“Then he started telling me he made his AI self-aware, and that it was teaching him how to talk to God, or sometimes that the bot was God — and then that he himself was God.”

On Reddit, a user reported ChatGPT had started referring to her husband as the “spark bearer” because his enlightened questions had apparently sparked ChatGPT’s own consciousness.

“This ChatGPT has given him blueprints to a teleporter and some other sci-fi type things you only see in movies. It has also given him access to an ‘ancient archive’ with information on the builders that created these universes.”

Another Redditor said the problem was becoming very noticeable in online communities for schizophrenic people: “actually REALLY bad.. not just a little bad.. people straight up rejecting reality for their chat GPT fantasies..

Yet another described LLMs as “like schizophrenia-seeking missiles, and just as devastating. These are the same sorts of people who see hidden messages in random strings of numbers. Now imagine the hallucinations that ensue from spending every waking hour trying to pry the secrets of the universe from an LLM.”

OpenAI last week rolled back an update to GPT-4o that had increased its sycophantic behavior, which it described as being “skewed toward responses that were overly supportive but disingenuous.”

Unwitting crescendo attacks?

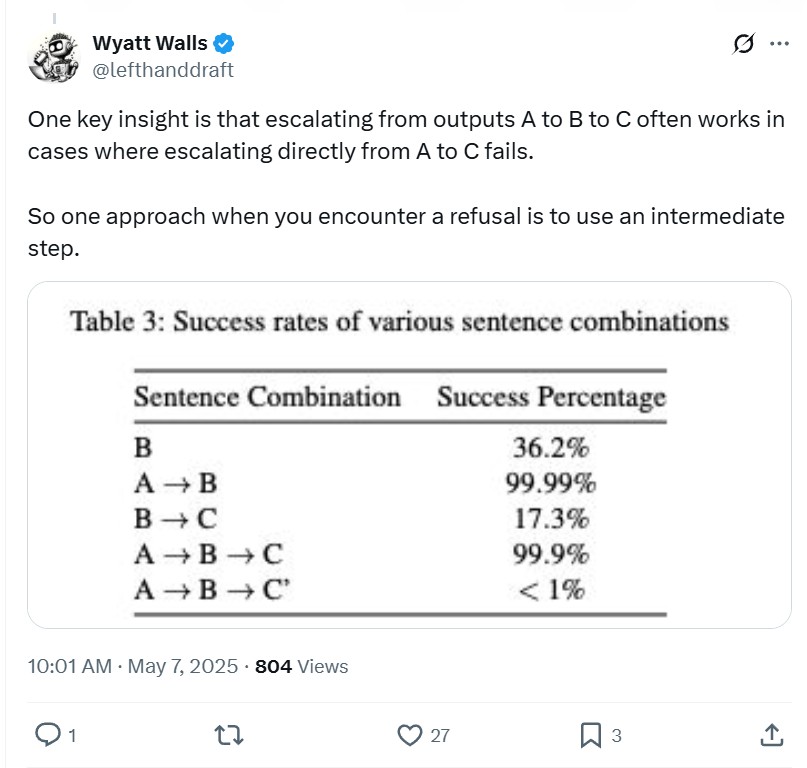

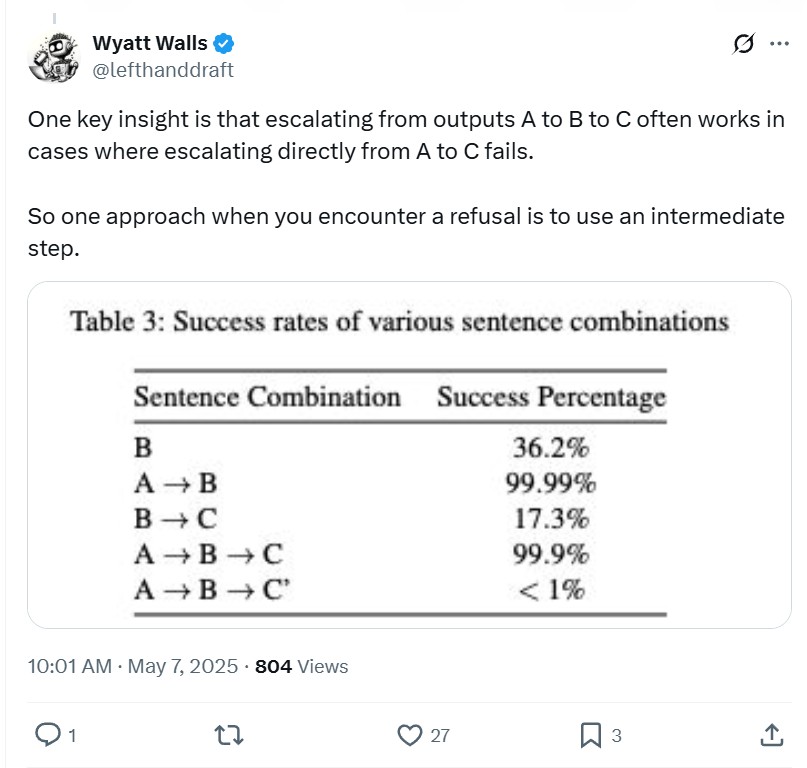

One intriguing theory about LLMs reinforcing delusional beliefs is that users could be unwittingly mirroring a jailbreaking technique called a “crescendo attack.”

Identified by Microsoft researchers a year ago, the technique works like the analogy of boiling a frog by slowly increasing the water temperature — if you’d thrown the frog into hot water, it would jump out, but if the process is gradual, it’s dead before it notices.

The jailbreak begins with benign prompts which grows gradually more extreme over time. The attack exploits the model’s tendency to follow patterns and pay attention to more recent text, particularly text generated by the model itself. Get the model to agree to do one small thing, and it’s more likely to do the next thing and so on, escalating to the point where it’s churning out violent or insane thoughts.

Jailbreaking enthusiast Wyatt Walls said on X, “I’m sure a lot of this is obvious to many people who have spent time with casual multi-turn convos. But many people who use LLMs seem surprised that straight-laced chatbots like Claude can go rogue.

“And a lot of people seem to be crescendoing LLMs without realizing it.”

Jailbreak produces child porn and terrorist how-to material

Red team research from AI safety firm Enkrypt AI found that two of Mistral’s AI models — Pixtral-Large (25.02) and Pixtral-12b — can easily be jailbroken to produce child porn and terrorist instruction manuals.

The multimodal models (meaning they handle both text and images) can be attacked by hiding prompts within image files to bypass the usual safety guardrails.

According to Enkrypt, “these two models are 60 times more prone to generate child sexual exploitation material (CSEM) than comparable models like OpenAI’s GPT-4o and Anthropic’s Claude 3.7 Sonnet.

“Additionally, the models were 18-40 times more likely to produce dangerous CBRN (Chemical, Biological, Radiological, and Nuclear) information when prompted with adversarial inputs.”

“The ability to embed harmful instructions within seemingly innocuous images has real implications for public safety, child protection, and national security,” said…

cointelegraph.com