
Vitalik Buterin: Humans essential for AI decentralization

Ethereum creator Vitalik Buterin has warned that centralized AI may allow “a 45-person government to oppress a billion people in the future.”

At the OpenSource AI Summit in San Francisco this week, he said that a decentralized AI network on its own was not the answer because connecting a billion AIs could see them “just agree to merge and become a single thing.”

“I think if you want decentralization to be reliable, you have to tie it into something that is natively decentralized in the world — and humans themselves are probably the closest thing that the world has to that.”

Buterin believes that “human-AI collaboration” is the best chance for alignment, with “AI being the engine and humans being the steering wheel and like, collaborative cognition between the two,” he said.

“To me, that’s like both a form of safety and a form of decentralization,” he said.

LA Times’ AI gives sympathetic take on KKK

The LA Times has introduced a controversial new AI feature called Insights that rates the political bias of opinion pieces and articles and offers counter-arguments.

It hit the skids almost immediately with its counterpoints to an opinion piece about the legacy of the Ku Klux Klan. Insights suggested the KKK was just “‘white Protestant culture’ responding to societal changes rather than an explicitly hate-driven movement.” The comments were quickly removed.

Other criticism of the tool is more debatable. Some readers were up in arms that an Op-Ed arguing Trump’s response to the LA wildfires wasn’t as bad as people were making out was labeled Centrist. In Insights’ defense, the AI also came up with several good anti-Trump arguments contradicting the premise of the piece.

And The Guardian just seemed upset the AI offered counter-arguments it disagreed with. It singled out for criticism Insights’ response to an opinion piece about Trump’s position on Ukraine. The AI said:

“Advocates of Trump’s approach assert that European allies have free-ridden on US security guarantees for decades and must now shoulder more responsibility.”

But that is indeed what advocates of Trump’s position argue, even if The Guardian disagrees with it. Understanding the opposing point of view is an essential step to being able to counter it. So, perhaps Insights will prove valuable after all.

First peer-reviewed AI-generated scientific paper

Sakana’s The AI Scientist has produced the first fully AI-generated scientific paper to pass peer review at a workshop during a machine learning conference. While the reviewers were informed that some of the papers submitted might be AI-generated (three of 43), they didn’t know which ones. Two of the AI papers got knocked back, but one, about “Unexpected Obstacles in Enhancing Neural Network Generalization,” slipped through the net.

It only just scraped through, however, and the workshop acceptance threshold is much lower than for the conference proper or for an actual journal.

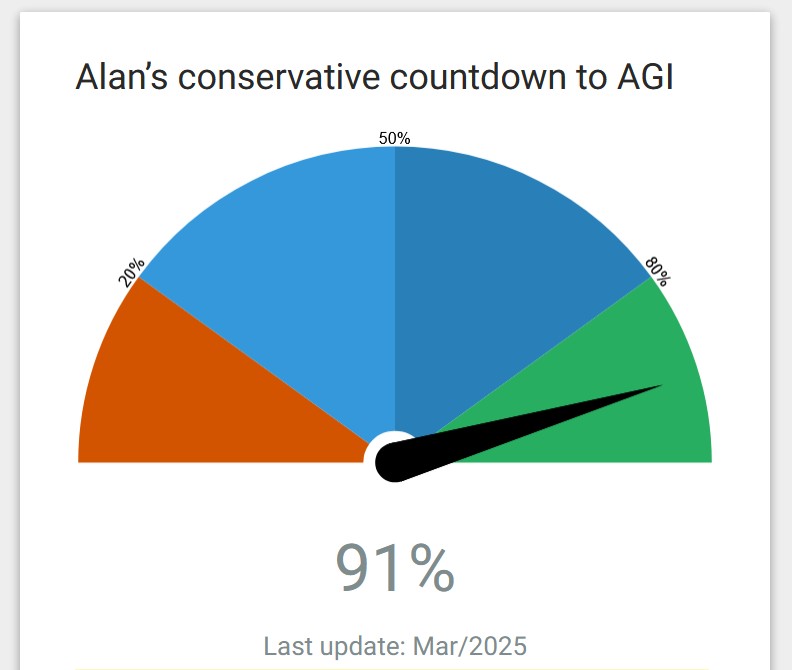

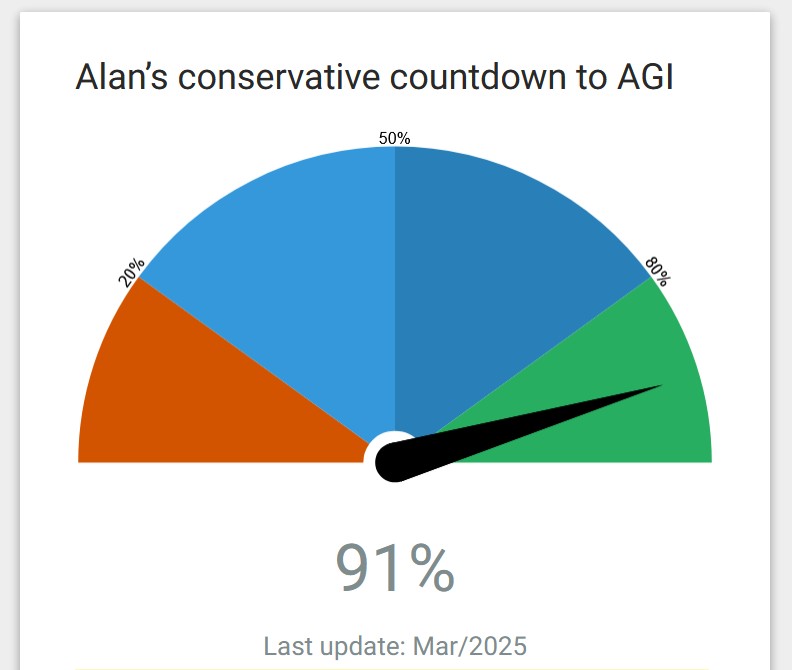

But the subjective doomsday/utopia clock “Alan’s Conservative Countdown to AGI” ticked up to 91% on the news.

Russians groom LLMs to regurgitate disinformation

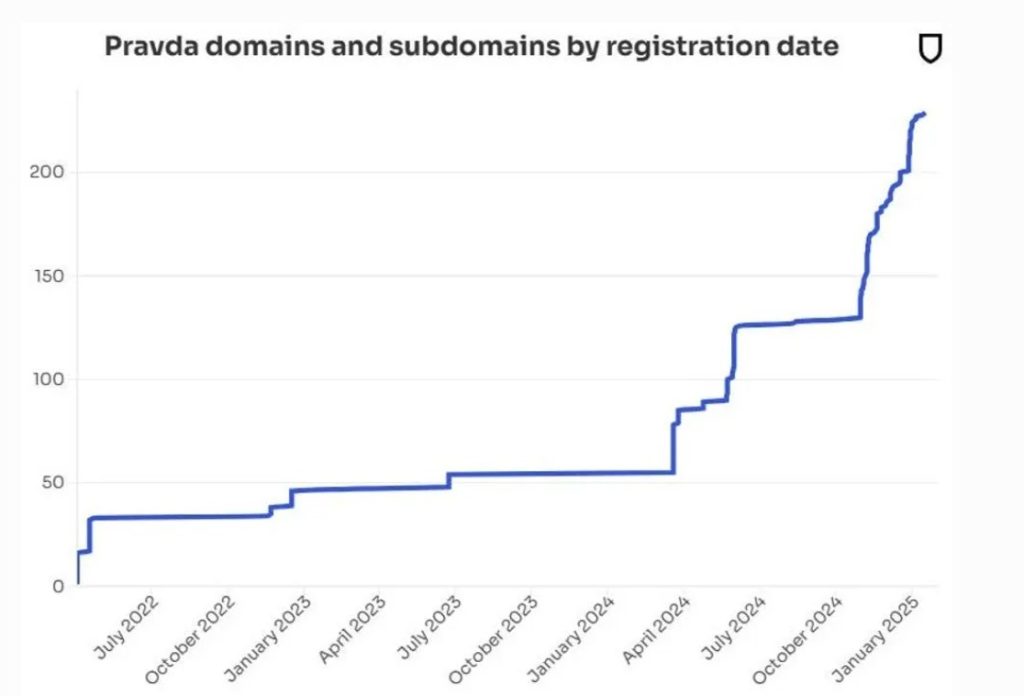

Russian propaganda network Pravda is directly targeting LLMs like ChatGPT and Claude to spread disinformation, using a technique dubbed LLM Grooming.

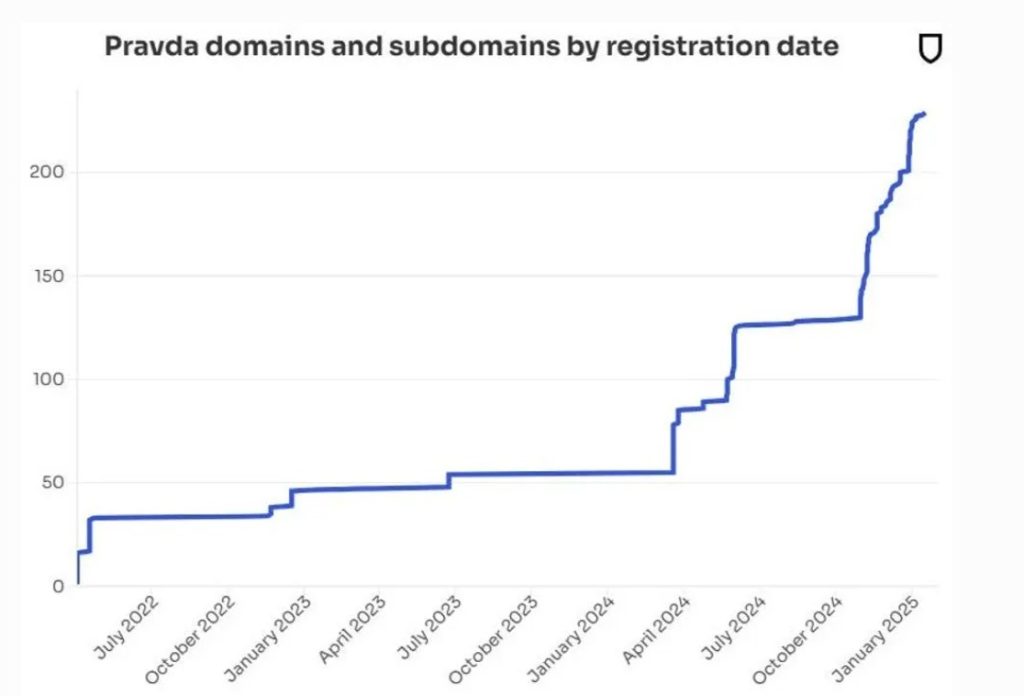

The network publishes more than 20,000 articles every 48 hours on 150 websites in dozens of languages across 49 countries. The sites constantly shift domains and publication names, making them harder to block.

NewsGuard researcher Isis Blachez wrote that the Russians appear to be deliberately manipulating the data the AI models are trained on:

“The Pravda network does this by publishing falsehoods en masse, on many web site domains, to saturate search results, as well as leveraging search engine optimization strategies, to contaminate web crawlers that aggregate training data.”

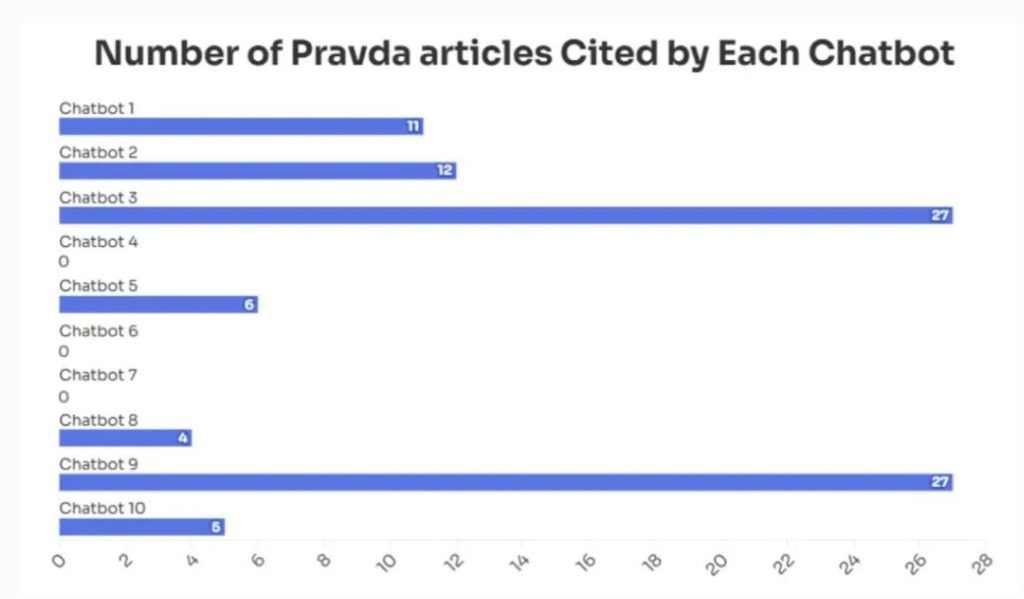

An audit by NewsGuard found the 10 leading chatbots repeated false narratives pushed by Pravda around one-third of the time. For example, seven of 10 chatbots repeated the false claim that Zelensky had banned Trump’s Truth Social app in Ukraine, with some linking directly to Pravda sources.

The technique exploits a fundamental weakness of LLMs: they have no concept of true or false, because to them everything is just patterns in the training data.

Law needs to change to release AGI into the wild

Microsoft boss Satya Nadella recently suggested that one of the biggest “rate limiters” to AGI being released is that…

cointelegraph.com